IS REAL-TIME PHOTOREALISTIC 3D VIEWING ON THE HORIZON?

Angela Geary responds to Michael Greenhalgh

Michael Greenhalgh’s question from the previous issue, 'Will computer modelling get better?', struck a chord with me. Like Michael, I’m exasperated with the quality of the real-time 3D representation that has been available for visualising cultural heritage datasets. I, too, have struggled to represent the authentic surface texture, colour and materials of complex surfaces at a level that matches the high-resolution 3D geometry that can be captured from artefacts. Obtaining such high-resolution data has become readily achievable with standard close-range 3D laser scanning techniques. To achieve a photorealistic result in a virtual representation, however, one has had to rely on output rendered to still images or to digital movie formats such as QuickTime® or Audio Video Interleave (AVI). QuickTime VR® goes somewhat further, offering basic interactivity such as object rotation and zooming. Unfortunately, this image-based format falls well short of the ideal, in which live exploration of the actual 3D geometry would be combined with a high-quality rendered appearance.

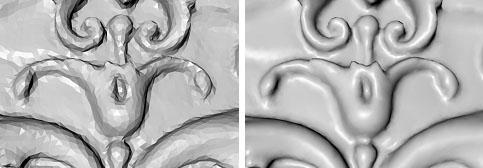

Fig. 1. Right: a model rendered with a simple Phong shader. Phong shading is an improvement on 'Gouraud' shading (left), particularly for modelling specular reflection: Gouraud shading computes colour only at the vertices and interpolates it linearly across each triangle, whereas Phong shading interpolates the surface normal and evaluates the lighting at every pixel.
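The difference between the two interpolation schemes can be reproduced numerically. The following Python sketch is illustrative only: it lights a single triangle edge whose two vertex normals lean 45° away from each other, with the light and the viewer directly overhead, and compares the intensity at the midpoint of the edge. The function names and the material constants (`kd`, `ks`, `shininess`) are hypothetical choices made for this example.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lerp(a, b, t):
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def phong_intensity(normal, light_dir, view_dir, kd=0.6, ks=0.4, shininess=50.0):
    """Phong reflection model (diffuse + specular) for one light, one channel."""
    n = normalize(normal)
    l = normalize(light_dir)
    v = normalize(view_dir)
    n_dot_l = max(dot(n, l), 0.0)
    # Mirror the light direction about the normal: r = 2(n.l)n - l
    r = tuple(2.0 * dot(n, l) * nc - lc for nc, lc in zip(n, l))
    spec = max(dot(r, v), 0.0) ** shininess if n_dot_l > 0.0 else 0.0
    return kd * n_dot_l + ks * spec


def gouraud_midpoint(n0, n1, light_dir, view_dir):
    """Gouraud: light each vertex, then interpolate the resulting intensities."""
    i0 = phong_intensity(n0, light_dir, view_dir)
    i1 = phong_intensity(n1, light_dir, view_dir)
    return i0 + (i1 - i0) * 0.5


def phong_midpoint(n0, n1, light_dir, view_dir):
    """Phong shading: interpolate the normal, then light the sample point."""
    n_mid = lerp(normalize(n0), normalize(n1), 0.5)
    return phong_intensity(n_mid, light_dir, view_dir)


if __name__ == "__main__":
    n0, n1 = (-1.0, 0.0, 1.0), (1.0, 0.0, 1.0)  # vertex normals tilted +/-45 degrees
    overhead = (0.0, 0.0, 1.0)                  # light and viewer straight above
    print(f"Gouraud midpoint: {gouraud_midpoint(n0, n1, overhead, overhead):.3f}")
    print(f"Phong midpoint:   {phong_midpoint(n0, n1, overhead, overhead):.3f}")
```

Both vertex normals reflect the overhead light away from the viewer, so Gouraud shading interpolates two highlight-free vertex values and reports about 0.42 at the midpoint. Phong shading reconstructs a normal pointing straight up there and shows the full specular highlight (1.0).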

Virtual Reality Modeling Language (VRML) promised this functionality, yet it is very limited with respect to surface shading. VRML supports only basic shading models such as Phong (Fig. 1), has limited support for texture mapping and offers no reliable, integrated method for shadow projection. All of these capabilities are essential for realistic representation in a practical interactive environment. VRML’s successor, X3D, has greater depth and flexibility in this respect; however, features such as programmable shading can be problematic to implement, because displaying them depends on client viewing software, usually a web browser plug-in or a stand-alone player, whose capabilities are normally limited. Other proprietary real-time 3D viewing formats have already come and gone. Shockwave 3D and similar applications attempted to provide cross-platform solutions for web-based 3D interaction. Although they met visualisation requirements with varying degrees of success, none has achieved the fundamental goal of real-time photorealistic representation.

High dynamic range (HDR) image-based rendering and texture-mapping techniques¹ produce extraordinarily realistic polychromatic lighting and reflection effects. Indeed, such methods represented a huge leap forward for 3D computer graphics. However, although research has demonstrated that these techniques can be applied in real time,² they have yet to be implemented in commercially available 3D viewing environments.
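The core idea of HDR image-based lighting can be sketched in a few lines of Python. This is a simplified illustration, not the method of the cited papers: the environment is a hypothetical list of (direction, radiance, solid angle) samples, the surface is purely diffuse (Lambertian), shadows and interreflections are ignored, the sun's brightness is an invented value, and the Reinhard tone-mapping curve is applied per channel rather than to luminance. It shows why dynamic range matters: clipping the environment at 1.0, as an ordinary 8-bit photograph would, all but removes the sun's contribution to the lighting.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sky_samples(radiance, n_theta=16, n_phi=32):
    """Uniform sky over the upper hemisphere, split into cells (midpoint rule).

    Each sample carries the solid angle sin(theta) * dtheta * dphi of its cell.
    """
    d_theta = (math.pi / 2) / n_theta
    d_phi = (2 * math.pi) / n_phi
    samples = []
    for i in range(n_theta):
        theta = (i + 0.5) * d_theta
        for j in range(n_phi):
            phi = (j + 0.5) * d_phi
            direction = (math.sin(theta) * math.cos(phi),
                         math.sin(theta) * math.sin(phi),
                         math.cos(theta))
            samples.append((direction, radiance, math.sin(theta) * d_theta * d_phi))
    return samples


def irradiance(normal, env):
    """Cosine-weighted sum of environment radiance arriving at a surface."""
    n = normalize(normal)
    total = [0.0, 0.0, 0.0]
    for direction, radiance, solid_angle in env:
        cos_theta = max(dot(n, normalize(direction)), 0.0)
        for c in range(3):
            total[c] += radiance[c] * cos_theta * solid_angle
    return tuple(total)


def diffuse_radiance(normal, albedo, env):
    """Light reflected by a Lambertian surface: (albedo / pi) * irradiance."""
    return tuple(a / math.pi * e for a, e in zip(albedo, irradiance(normal, env)))


def clamp_env(env):
    """Mimic a low-dynamic-range capture by clipping radiance at 1.0."""
    return [(d, tuple(min(c, 1.0) for c in r), w) for d, r, w in env]


def reinhard(rgb):
    """Reinhard's global tone-mapping curve L / (1 + L), applied per channel."""
    return tuple(c / (1.0 + c) for c in rgb)


if __name__ == "__main__":
    # Hypothetical scene: a dim uniform sky plus a sun 100,000 times brighter,
    # 45 degrees above the horizon, subtending about 6.8e-5 steradians.
    env = sky_samples((1.0, 1.0, 1.0)) + [((1.0, 0.0, 1.0), (1e5, 1e5, 1e5), 6.8e-5)]
    albedo = (0.5, 0.5, 0.5)
    toward_sun = (1.0, 0.0, 1.0)
    hdr = diffuse_radiance(toward_sun, albedo, env)
    ldr = diffuse_radiance(toward_sun, albedo, clamp_env(env))
    print("HDR environment, tone-mapped:", reinhard(hdr))
    print("Clipped environment, tone-mapped:", reinhard(ldr))
```

Keeping the full radiance range until the final tone-mapping step is what lets a small, very bright source dominate the illumination, as it does in reality.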

The reason for such shortcomings in currently available 3D viewing tools is neither a lack of ambition nor a misjudgement of user needs on the part of their developers. Until very recently, the limiting factor was the combined capability of graphics processing hardware, operating systems and 3D graphics application programming interfaces (APIs), including the Open Graphics Library (OpenGL) and Microsoft® DirectX®. However, I can offer some hope that vastly improved options for 3D visualisation may be on the not-too-distant horizon.

Computational advances, such as multiple processors, 64-bit addressing and increasingly powerful graphics cards in consumer level systems are increasing the potential

to exploit new, advanced real-time rendering features available in OpenGL 2.0. Particularly exciting is the new shading language (GLSL) functionality offered in this widely used,

cross-platform graphics API. Because sufficient processing capability has finally become available, these programmable shading features will allow real-time representation of

complex surface textures. For example, precise diffuse colour and specular colour image maps can be layered and combined with advanced lighting effects - such as bump-mapping -

to create highly realistic results. Additionally, an advanced GLSL lighting method known as ‘per pixel shading’ offers superior realism in object illumination that is both

independent of polygonal mesh resolution, and quite unlike the traditional per-vertex approach. Using these advanced features, it will soon be possible to authentically

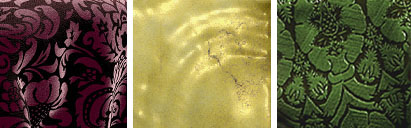

render complex and challenging materials such as velvet, carved marble or distressed gilding, in a real-time environment accessible to consumer-level computer users (Fig.2).

Fig. 2. Left: rendered simulated velvet cloth. Centre: a rendered surface with simulated gilding. Right: virtually reconstructed wax appliqué, a relief decoration technique used in European polychrome sculpture.
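The layering of diffuse and specular maps with bump mapping and per-pixel lighting, as described above, can be illustrated with a small Python sketch. In practice this logic would run in a GLSL fragment shader on the graphics card; here it is written as plain loops over hypothetical diffuse-colour, specular-colour and height maps of equal size. The bump-mapping step is a simplified form that assumes a flat underlying surface facing the viewer, with normal (0, 0, 1), and the lighting uses the Blinn-Phong model; both are assumptions made for illustration, not a description of the SCIMod software.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def bump_normal(height, x, y, strength=1.0):
    """Tilt the flat normal (0, 0, 1) by the local slope of a height map."""
    rows, cols = len(height), len(height[0])
    # Finite differences, clamping neighbour lookups at the map edges.
    x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
    y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
    dhdx = (height[y][x1] - height[y][x0]) / (x1 - x0) if x1 > x0 else 0.0
    dhdy = (height[y1][x] - height[y0][x]) / (y1 - y0) if y1 > y0 else 0.0
    return normalize((-strength * dhdx, -strength * dhdy, 1.0))


def shade_pixel(diffuse_rgb, specular_rgb, normal, light_dir, view_dir, shininess=32.0):
    """Blinn-Phong lighting evaluated for a single pixel, clipped to [0, 1]."""
    l = normalize(light_dir)
    v = normalize(view_dir)
    half = normalize(tuple(a + b for a, b in zip(l, v)))
    n_dot_l = max(dot(normal, l), 0.0)
    spec = max(dot(normal, half), 0.0) ** shininess if n_dot_l > 0.0 else 0.0
    return tuple(min(d * n_dot_l + s * spec, 1.0)
                 for d, s in zip(diffuse_rgb, specular_rgb))


def shade_image(diffuse_map, specular_map, height_map, light_dir, view_dir):
    """Combine the three maps at every pixel, as a fragment shader would."""
    return [
        [shade_pixel(diffuse_map[y][x], specular_map[y][x],
                     bump_normal(height_map, x, y), light_dir, view_dir)
         for x in range(len(height_map[0]))]
        for y in range(len(height_map))
    ]


if __name__ == "__main__":
    # Hypothetical 3 x 3 maps: a reddish diffuse colour, a grey specular colour,
    # and a height map that rises from left to right.
    diffuse_map = [[(0.5, 0.2, 0.1)] * 3 for _ in range(3)]
    specular_map = [[(0.3, 0.3, 0.3)] * 3 for _ in range(3)]
    height_map = [[0.0, 1.0, 2.0] for _ in range(3)]
    overhead = (0.0, 0.0, 1.0)
    for row in shade_image(diffuse_map, specular_map, height_map, overhead, overhead):
        print([tuple(round(c, 3) for c in px) for px in row])
```

Because the perturbed normal is derived from the height map at every pixel, the fine surface relief appears regardless of how many polygons the underlying mesh has.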

These improvements in real-time rendering will help us realise our ambition to present high-fidelity 3D data with authentic textures and material shading in a live viewing environment. There will no longer be any barrier, other than our imagination, to developing a new generation of visualisation tools that can fully represent the richness of the visual, geometric and time-based data that can be acquired from artefacts.

I've waited a long time for a truly capable tool so, in the spirit of 'if you want something done...', work on such an application is now under way at the University of the Arts London. As part of the SCIMod project, we are striving to develop a new visualisation environment that meets the high-fidelity requirements of the cultural heritage sector. For more information on SCIMod, contact Dr. Angela Geary, SCIRIA Research Unit, University of the Arts London (a.geary AT camberwell.arts.ac.uk).

Notes:

1. Debevec, P. (1998), 'Rendering synthetic objects into real scenes: bridging traditional and image-based graphics with global illumination and high dynamic range photography', Proceedings of the 25th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH '98), New York, NY: ACM Press, pp. 189-198.

2. Cohen, J., Tchou, C., Hawkins, T. and Debevec, P. E. (2001), 'Real-Time High Dynamic Range Texture Mapping', Proceedings of the 12th Eurographics Workshop on Rendering Techniques, Gortler, S. J. and Myszkowski, K. (eds.), London: Springer-Verlag, pp. 313-320.

© Angela Geary and 3DVisA, 2007.
